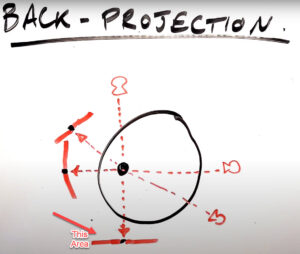

Image reconstruction is the process of turning raw data collected by the detectors into image data which can be viewed on a screen. Both raw data and image data can be manipulated in different ways to create images with differing properties. The most rudimentary process of turning raw data into image data is called back projection.

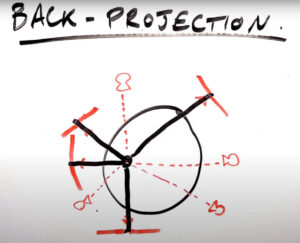

Back Projection

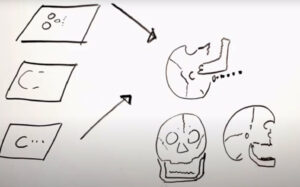

This is the process of mathematically mapping the attenuation pathway at every angle measured through a scan to locate where in a patient attenuation is occurring. If there is a dense object at a given location in a patient, then a projection at this angle will show us attenuation in this area, because fewer photons will arrive at the detector through this attenuation pathway.

When we apply that same principle from various angles through the patient, we can map where that dense object is within the patient and generate a cross-sectional image that shows the object.

The star pattern you see here is a natural drawback of the back projection method, but it’s reduced to an extent by the fact that we have many other angles showing where there is or isn’t attenuation.

The beam in this direction, for example, tells us that there is no dense object here.

This means that the more projection angles we have in our data set, the greater our image quality will be. The relatively simple process known as back projection is now applied within another process called filtered back projection. All this means is that a filter is applied to all of the data that’s put through the back projection process.
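Plain (unfiltered) back projection, and the effect of adding projection angles, can be sketched in a few lines. This is a minimal illustration rather than a scanner implementation: it assumes an idealized parallel-beam geometry, a single dense point as the object, and nearest-bin sampling. The helper names `point_sinogram` and `back_project`, the image size, and the angle sets are all made up for the example.

```python
import math

def point_sinogram(point, angles_deg, n_det):
    """Ideal parallel-beam projections of one dense point (hypothetical helper)."""
    x0, y0 = point
    det_centre = (n_det - 1) / 2
    sinogram = []
    for angle in angles_deg:
        theta = math.radians(angle)
        # Detector bin hit by the ray that passes through the point at this angle.
        t = x0 * math.cos(theta) + y0 * math.sin(theta)
        row = [0.0] * n_det
        row[int(round(t + det_centre))] = 1.0
        sinogram.append(row)
    return sinogram

def back_project(sinogram, angles_deg, size):
    """Smear each projection back across the image along its own angle."""
    centre = (size - 1) / 2
    n_det = len(sinogram[0])
    det_centre = (n_det - 1) / 2
    image = [[0.0] * size for _ in range(size)]
    for row, angle in zip(sinogram, angles_deg):
        theta = math.radians(angle)
        c, s = math.cos(theta), math.sin(theta)
        for iy in range(size):
            for ix in range(size):
                x, y = ix - centre, iy - centre
                d = int(round(x * c + y * s + det_centre))
                if 0 <= d < n_det:
                    image[iy][ix] += row[d]
    n = len(angles_deg)
    return [[v / n for v in r] for r in image]  # normalize by number of angles

SIZE, N_DET = 21, 31
POINT = (3, -2)  # offset from the image centre -> pixel column 13, row 8

few_angles = [0, 45, 90, 135]
many_angles = list(range(0, 180, 5))

few = back_project(point_sinogram(POINT, few_angles, N_DET), few_angles, SIZE)
many = back_project(point_sinogram(POINT, many_angles, N_DET), many_angles, SIZE)

# Object pixel versus a pixel on the vertical streak, 10 rows away from it.
print("object:", few[8][13], many[8][13])
print("streak:", few[18][13], many[18][13])
```

The object pixel receives a contribution from every angle, while a pixel on one arm of the star only receives contributions from the few angles whose rays pass through both it and the object, so the streak fades relative to the object as angles are added.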

A filter, also referred to as a kernel or sometimes just as an algorithm, is a form of data pre-processing. It’s an algorithmic manipulation which alters the value of a pixel and its neighboring pixels relative to one another. So, for example, if we want to see very sharp edges and details, we would apply a sharpening filter, also known as an edge enhancement or high pass filter, and what that does is drive the value of each pixel away from the value of its neighboring pixels.

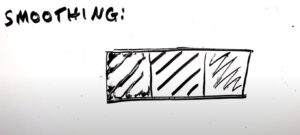

Sharpening filter

So in this example we have an interface between a dark gray and a light gray pixel, and we’re going to apply a sharpening filter. When that filter is applied to this middle pixel, it is going to take its neighbors and drive their values away from the average value of these three. So the dark pixel becomes darker and the light pixel becomes lighter.
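As a rough sketch of that idea, the snippet below pushes each pixel in a one-dimensional row away from the average of itself and its two neighbors. The function name `sharpen`, the `amount` parameter, and the gray values are assumptions for illustration; real reconstruction kernels are vendor-specific and considerably more elaborate.

```python
def sharpen(row, amount=1.0):
    """Push each pixel away from the mean of itself and its two neighbours."""
    out = []
    for i, value in enumerate(row):
        left = row[max(i - 1, 0)]                # repeat the edge pixel at the borders
        right = row[min(i + 1, len(row) - 1)]
        local_mean = (left + value + right) / 3
        out.append(value + amount * (value - local_mean))
    return out

edge = [80, 80, 80, 160, 160, 160]   # dark gray | light gray interface
sharpened = sharpen(edge)
print(sharpened)
```

Pixels in the flat regions are left unchanged, while the two pixels at the interface move apart: the dark one drops below 80 and the light one rises above 160.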

Smooth filter

Conversely, if we use a smooth filter, also known as a blurring or low pass filter, we’re going to do the opposite and make this sharp black-white interface into a much smoother gray-to-gray transition. So we’re averaging this pixel out with the value of its neighbors. With filtered back projection we’re applying these mathematical transformations to our whole data set when reconstructing it, so every pixel is individually going through some kind of algorithmic manipulation relative to its neighbors to give us either a sharper or a smoother set of images.
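The averaging described above can be sketched with a three-pixel moving average over a hard black-white interface. The function name `smooth` and the pixel values are illustrative assumptions, not a vendor kernel.

```python
def smooth(row):
    """Replace each pixel with the mean of itself and its two neighbours."""
    out = []
    for i, value in enumerate(row):
        left = row[max(i - 1, 0)]                # repeat the edge pixel at the borders
        right = row[min(i + 1, len(row) - 1)]
        out.append((left + value + right) / 3)
    return out

edge = [0, 0, 0, 255, 255, 255]   # sharp black | white interface
smoothed = smooth(edge)
print(smoothed)  # -> [0.0, 0.0, 85.0, 170.0, 255.0, 255.0]
```

The single-step jump from 0 to 255 becomes a ramp through two intermediate grays, which is the blurring of edges that comes with a smooth filter.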

This has a direct impact on the properties of our image. A sharpening algorithm is going to give us higher spatial resolution and crisp, sharp edges, but it’s also going to make the noise more prominent, whereas a smoother algorithm is going to blur and smooth out that noise, but at the cost of also blurring out our nice sharp edges. So, as with everything in CT, there is a trade-off between sharp and smooth images, and in some scans you’re going to reconstruct both sharp and smooth sets of data, which can each be used to view different structures within one scan.

For example, with a chest CT you’re going to reconstruct both lung and soft tissue data sets from your raw data. The lung images will be reconstructed with a sharp filter so we can see small nodules and fine details in the lung tissue with high spatial resolution, and the soft tissue images will be reconstructed with a smooth filter so we can look at the structures of the heart and the mediastinum with reduced noise and therefore with better contrast resolution. So two different filters are used, and two different windows are used to view these two different reconstructions from one raw data set.

Beyond filtered back projection, another method of reconstructing raw data into image data is called iterative reconstruction.

Iterative reconstruction

Iterative reconstruction is a repetitive process which starts with a filtered back projection, which is treated as an estimate of the true data set. Each data point in this estimate is then compared to the scan data to see how close to the true value it is, and the estimate is corrected accordingly. This process happens in a cycle, repeatedly, until a certain predetermined number of iterations has happened, and at that point the assumed image has been repeatedly refined and is going to be very close to a true representation of the data.

The advantage of iterative reconstruction is that both noise and certain artifacts in the image can be reduced, because both of these things are non-true representations of what should be in the data set. So with every iteration we’re reducing these inconsistencies in our data. The increasing proliferation and effectiveness of iterative reconstruction algorithms has meant that we are able to reduce the radiation doses used in CT scanning, because any form of noise-reducing technology means we can settle for more noise in our acquired data, which is what a lower dose gives us, and then reduce that noise with the power of computing. So a lot of the dose reduction strategies which various vendors have developed recently in CT have been driven by improvements in iterative reconstruction software, alongside a growth in computing power that has enabled these complex algorithms to run much more quickly.
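The estimate, compare, and correct cycle can be illustrated on a toy 2x2 image. This sketch makes several assumptions that are not in the text above: it uses a simple algebraic update in the style of ART (spreading each ray's mismatch evenly over the pixels on that ray) rather than any vendor algorithm, it starts from a flat image instead of a filtered back projection to stay short, and the names `RAYS`, `forward_project` and `iterative_reconstruction` are invented for the example.

```python
# 2x2 image flattened as [top-left, top-right, bottom-left, bottom-right].
TRUE_IMAGE = [1.0, 2.0, 3.0, 4.0]

# Each ray is the set of pixels it crosses: two rows, two columns, two diagonals.
RAYS = [(0, 1), (2, 3), (0, 2), (1, 3), (0, 3), (1, 2)]

def forward_project(image):
    """Simulate the measurement: sum of pixel values along every ray."""
    return [sum(image[p] for p in ray) for ray in RAYS]

def iterative_reconstruction(measured, n_iterations):
    # Starting estimate: a flat image with the right total
    # (the two row rays together cover every pixel once).
    flat = (measured[0] + measured[1]) / 4
    estimate = [flat] * 4
    for _ in range(n_iterations):           # predetermined number of iterations
        for ray, target in zip(RAYS, measured):
            simulated = sum(estimate[p] for p in ray)
            # Compare the estimate with the scan data and share out the mismatch.
            correction = (target - simulated) / len(ray)
            for p in ray:
                estimate[p] += correction
    return estimate

measured = forward_project(TRUE_IMAGE)      # stands in for the scanner's raw data
result = iterative_reconstruction(measured, n_iterations=10)
print(result)
```

On this tiny, noise-free problem the estimate settles on the true pixel values; in a real scan the measured data are noisy, and the number of iterations and the correction model are what the vendor algorithms tune.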

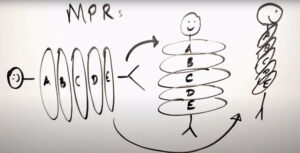

Multiplanar reformation (MPR)

After reconstructing our image data from the raw data, we can apply several other manipulations to those images. Multiplanar reformats, or MPRs, are reconstructed in almost every CT scan performed today, and what this means is simply putting our data together to view it from a different orientation. Because a set of axial images is basically a lined-up row of voxels displayed as pixels, we can effectively stack all those slices on top of each other and view the voxels from any angle.
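Stacking and re-slicing can be sketched with a small nested list standing in for the volume. The indexing convention `volume[z][y][x]` (slice, row, column), the tiny dimensions, and the function names are assumptions made for the example; oblique planes need interpolation and are left out.

```python
NZ, NY, NX = 3, 4, 5   # number of axial slices, rows per slice, columns per row

# Each voxel value encodes its own position so the re-slicing is easy to check.
volume = [[[100 * z + 10 * y + x for x in range(NX)]
           for y in range(NY)]
          for z in range(NZ)]

def axial(volume, z):
    """One of the original slices: fixed z, all rows and columns."""
    return volume[z]

def coronal(volume, y):
    """Fixed row y taken from every slice, stacked along z."""
    return [axial_slice[y] for axial_slice in volume]

def sagittal(volume, x):
    """Fixed column x taken from every slice, stacked along z."""
    return [[row[x] for row in axial_slice] for axial_slice in volume]

print(coronal(volume, 2))
print(sagittal(volume, 3))
```

No new data is created here: the coronal and sagittal images are just different walks through the same stack of voxels.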

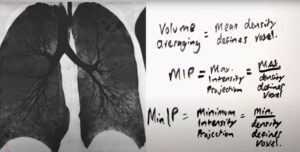

So we can manipulate those axial images to be viewed in the coronal plane, the sagittal plane, or even an oblique plane through the body. In most scan protocols the scanner will have pre-loaded instructions to generate an axial image series from the raw data and then to generate MPRs in the coronal and sagittal planes from that axial data. If thick axial slices are used to reconstruct the MPRs, the coronal and sagittal images will have a jagged appearance, known as a stair-step artifact, at the interfaces between those slices. So routine practice is to reconstruct an axial data set in the thinnest possible slices from the raw data, with overlap, and then to use that thin data set solely for the purpose of generating MPRs.

When generating reconstructions in any plane from a thin set of image data, there are a few ways to render these slices. If, for example, we’re using a set of 0.5 millimeter slices to generate a three millimeter slice, the simplest and most common way to render that three millimeter slice would be to take all those stacked 0.5 millimeter voxels and average them to give us a value for the voxel represented by that three millimeter slice. This is how normal images are made, and it’s referred to as volume averaging because you’re averaging all the values within that volume. This isn’t the only way to render that data, however. We could also take the maximum value found within that three millimeter volume and use it to represent the whole voxel. This is called a maximum intensity projection, or MIP.

Similarly, we could take the minimum value represented within this volume and take that to represent the whole voxel. This is called a minimum intensity projection.
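The three rendering modes differ only in how the stacked thin voxels are combined. The sketch below collapses six thin slices into one thick slice; the function name `render_slab` and the Hounsfield-like numbers are made up for illustration (the first pixel column mimics aerated lung containing one small dense spot).

```python
def render_slab(thin_slices, mode):
    """Collapse a stack of thin slices into one thick slice, pixel by pixel."""
    combine = {
        "average": lambda values: sum(values) / len(values),  # volume averaging
        "mip": max,                                           # maximum intensity projection
        "minip": min,                                         # minimum intensity projection
    }[mode]
    # zip(*thin_slices) groups the values that share a pixel position.
    return [combine(values) for values in zip(*thin_slices)]

# Six 0.5 mm slices, three pixels each (illustrative values only).
thin_slices = [
    [-800, 30, -1000],
    [-850, 35, -1000],
    [  40, 40, -1000],
    [-820, 30,  -990],
    [-900, 25, -1000],
    [-830, 20, -1000],
]

average = render_slab(thin_slices, "average")
mip = render_slab(thin_slices, "mip")
minip = render_slab(thin_slices, "minip")
print(average, mip, minip)
```

In the first pixel the dense spot is diluted by averaging, preserved by the MIP, and hidden entirely by the minimum intensity projection.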

While volume averaging is the most common mode of rendering image reconstructions, MIPs and, to a lesser extent, minimum intensity projections do have certain applications in which they can be useful. For example, if you’re looking for tiny lung nodules, a MIP may be used to bring out and highlight nodules which are smaller than your slice thickness of, say, two millimeters.

On the other hand, if you want to look only at the air space in the lung, a minimum intensity projection will give you a very black image to see air spaces without obstruction from lung tissue which might otherwise occupy the same voxel.

3D reconstructions

The last manipulation to discuss is 3D reconstruction. Because a set of thinly stacked axial images is made up of volumetric information, we can use that volumetric information to generate a 3D image that represents the volume.

Surface rendering techniques can be used to detect a continuous surface within a volume of data. Volume rendering techniques can be used to render the full scope of 3D data and see various tissues in conjunction with one another.
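As a very simplified illustration of detecting a surface, the snippet below marks the voxels at or above a threshold that touch at least one voxel below it. This is only an assumption-laden sketch: the threshold, the values, and the function name `surface_voxels` are invented, and practical surface rendering goes further by building a mesh from the boundary (for example with a marching cubes algorithm) and shading it.

```python
def surface_voxels(volume, threshold):
    """Return (z, y, x) of voxels >= threshold that border a voxel below it."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

    def inside(z, y, x):
        # Positions outside the volume count as "not part of the object".
        return (0 <= z < nz and 0 <= y < ny and 0 <= x < nx
                and volume[z][y][x] >= threshold)

    surface = set()
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if inside(z, y, x) and any(
                    not inside(z + dz, y + dy, x + dx) for dz, dy, dx in neighbours
                ):
                    surface.add((z, y, x))
    return surface

AIR, BONE = -1000, 1000   # illustrative values only
# A 3x3x3 dense cube sitting in the middle of a 5x5x5 block of air.
volume = [[[BONE if all(1 <= c <= 3 for c in (z, y, x)) else AIR
            for x in range(5)]
           for y in range(5)]
          for z in range(5)]

surface = surface_voxels(volume, threshold=300)
print(len(surface))  # 26 of the cube's 27 voxels lie on its surface
```

Only the single voxel at the centre of the cube is excluded, since all six of its neighbors are also above the threshold.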